Cognitive Artifacts

SEPTEMBER 17, 2019

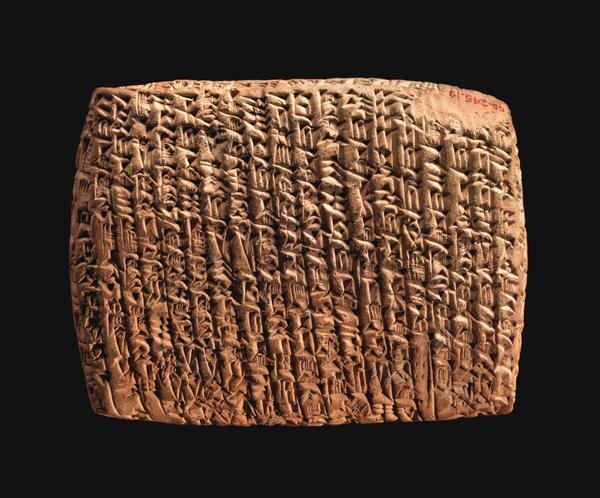

The field of computer science did not begin in earnest until the 1950s, but we humans have been outsourcing our cognition to external objects for centuries. From the alphabet to the abacus to the astrolabe, the tools we've invented have extended our cognitive capacities far beyond the limits of our brains and bodies. These tools don't merely do our thinking for us; many of them can be internalized into our minds, extending the range of possible thoughts we can think. Written language itself is such a tool, as are maps, graphs, and Arabic numerals. These "cognitive artifacts," as cognitive scientist Donald Norman calls them, account for much of our species's unique intelligence.

But not all technologies leave us stronger in their absence. GPS systems help us navigate, but can let our native sense of direction atrophy. Digital calculators make arithmetic trivially easy, but don't improve our mental math. And modern machine learning systems have tremendous predictive power, but are often opaque even to their makers. The mathematical biologist David Krakauer calls such tools competitive cognitive artifacts: technologies that improve our cognitive power, but leave us worse off without them. He contrasts these with complementary cognitive artifacts, like the abacus or Arabic numerals, which we can internalize, thereby enhancing our independent cognition.

Traditionally, artificial intelligence has been conceived in competitive terms. Indeed, the explicit goal of the field -- artificial general intelligence, defined as the capacity to outperform humans on all cognitive tasks -- would, if realized, amount to our obsolescence. But there is another possible goal, articulated in the 1960s by computer science pioneers like Douglas Engelbart. Engelbart imagined a future in which computers could augment the human intellect, bolstering and broadening our problem-solving abilities. "Rather than outsourcing cognition," Shan Carter and Michael Nielsen explain, "[such an approach is] about changing the operations and representations we use to think; it's about changing the substrate of thought itself."

Carter and Nielsen, researchers at Google Brain and Y Combinator respectively, have recently taken up this mantle, exploring the use of AI as a tool for augmenting human intelligence. Intimations of this possibility can already be seen, they argue, in the properties of certain computer interfaces. Mastering Photoshop, for example, entails internalizing many key principles of image manipulation. "In a similar way to language, maps, etc., a computer interface can be a cognitive technology," Nielsen writes. "A sufficiently imaginative interface designer can invent entirely new elements of cognition." The salient aspects of the interface become efficient mental representations of the problem at hand, allowing us to grasp it with our limited computational resources.

Many of those we regard as geniuses, particularly in math and science, achieve their brilliance in part through such efficient representations. If key aspects of these representations could be embodied in the media of explanation, we might dramatically expand access to technical knowledge. As a proof of concept, Nielsen and Khan Academy researcher Andy Matuschak have written a quantum computing guide in a new "mnemonic medium" that helps readers remember key concepts almost effortlessly as they progress. But these efforts are only the beginning. In the long run, new media don't just make thinking easier; they can become new cognitive artifacts, expanding what it's possible to think.